In this paper, we reflect on the implementation of a gamified application that helps students learn important facts about their study program. We focus on two design features, different configurations of which were tested in a field experiment among Dutch university students (N = 101). The first feature is feedback, which is expected to increase engagement, with personalized (“tailored”) feedback expected to be more effective than generic feedback. The second feature is a session limit designed to prevent users from “binging” the game, because binge play could prevent deep learning. Contrary to our expectations, results showed that generic feedback was more effective than tailored feedback. The session limit, however, did prevent binging without reducing the overall number of sessions played. Our findings suggest that game properties need careful consideration, because they can affect how well a gamified application encourages and sustains play.


New technologies offer exciting opportunities to engage students in learning in new ways. One promising way to motivate students to learn is gamification, which can be defined as “the use of game-design elements in non-game contexts” (Deterding et al., 2011: 9). In the past decade, the popularity of gamification has increased rapidly, and various cases are known in which businesses, web designers, and educators used gamification to engage and motivate a target group with successful outcomes (Chou, 2017; Mollick and Rothbard, 2014). However, more systematic research is needed to establish when and how gamification can be used to the greatest benefit in an educational setting. Many different gamification options exist and their use varies widely; we briefly review them in the next sections to contextualize our study.

The vast interest in gamification has instigated a wide array of studies across many different topics, audiences, and disciplines. For instance, a 2012 literature review found more than 125 empirical studies examining the effects of gamification in a variety of contexts (Connolly et al., 2012). By contrast, a more recent systematic review that searched only for gamification in the context of education found just 15 studies on some aspect of gamification in an educational setting (Nah et al., 2014). This latter review suggests that feedback is a potentially useful mechanism for increasing students’ engagement with a specific course or learning outcome. However, research on feedback in other contexts shows that the effectiveness of feedback depends not only on the verbal feedback itself but also on situational factors (e.g. Burgers et al., 2015; Hatala et al., 2014; Kluger and DeNisi, 1996). One aspect of feedback that is often associated with effectiveness is personalized (or “tailored”) feedback (e.g. Krebs et al., 2010; Lustria et al., 2013). Thus, we first studied whether personalized feedback also outperforms non-personalized (“generic”) feedback in stimulating play and learning with gamified learning tools for education.

Second, gamification for learning can only be successful when students play for an extended amount of time, so that they process enough (new) information for learning to take place. In addition, the literature shows that distributed practice enhances the learning of new skills and knowledge (for a review, see Dunlosky et al., 2013). This means that it is more effective to spread learning over short sessions on several days than to spend the same time in one long session (see also Heidt et al., 2016; Rohrer, 2015). However, in some contexts, players choose to “binge” an entire game in one long period rather than spacing out play over several short sessions. Thus, we tested whether “enforcing” distributed learning by imposing a daily session limit positively affects play and learning or whether it backfires.

To answer these questions, the current study investigated the potential of gamification for engaging university students with an online learning platform at a Dutch university. A gamified application, in the form of a multiple-choice quiz app, was launched among undergraduate students in a Faculty of Social Sciences. The purpose of the app was to teach students relevant information about various aspects of university life, such as student rules, regulations, and social activities, ranging from exam regulations to social events on campus. Fitz-Walter et al. (2011: 123) found that “new students often feel lost, having trouble meeting new friends and finding what services and events are available on campus” and argue that games can help alleviate this. Accordingly, our game was aimed at new and existing students who may not yet be aware of specific campus services or may be disinclined to browse or search the university website. The gamified application was a modified version of a popular online quiz game that rewards users for playing and for correctly answering questions about specific topics. Its game aspects include points, rewards, and feedback from the governing system. Our content was identical to information available at various places on the university website, but it was brought together in the game and thus presented in a novel learning environment. To introduce the app, we sent out emails, presented the app in classes, and put up posters. Students could participate by following a link to a short survey where they entered basic personal information and registered to use the app.

We hypothesized that learning engagement, in the form of persistent play, would be highest for players who (a) received tailored feedback and (b) had a session limit to enforce distributed learning. To test our hypotheses, we created experimental conditions in which we tailored feedback for some participants but not for others, and in which play was limited for some participants but not for others. For all participants, we also tracked usage data and surveyed participation and engagement. The tracking and survey data (e.g. students’ interest in the app) can provide lessons for future designs and launch campaigns in university settings. The following sections elaborate on the background of gamification and distributed practice in the field of education to further specify the hypotheses that guided our research.

Despite its current popularity, the term “gamification” is still young. Its first documented use is often credited to Brett Terill (2008), who talked about “gamification” in a blog post to define the act of “taking game mechanics and applying them to other web properties to increase engagement.” In its current usage, the concept of gamification is not restricted to web properties, but more generally refers to “the use of game design elements in non-game contexts” (Deterding et al., 2011: 9) or “using game-based mechanics, aesthetics and game thinking to engage people, motivate action, promote learning, and solve problems” (Kapp, 2012: 10). In the current paper, we focused on the properties of gamification that can be used to stimulate learning.

Gamification is related, but not identical, to the concept of game-based learning. Where gamification is about the use of game design elements in a non-game context, game-based learning refers to the use of actual games to acquire skills or knowledge. In game-based learning, the skills that are put to the test in the game correspond to the learning task (Gee, 2013), as is for instance the case in a game where medical students or personnel perform surgical procedures in a simulated environment (Kapp, 2012).

For certain cases, such as the multiple-choice quiz app used in the current study, the distinction between a gamified experience and game-based learning can be blurred. Cheong et al. (2013: 207) argue that gamification “can be viewed as a continuum ranging from serious games at one end of the spectrum to normal activities to which game elements have been added at the other end of the spectrum.” The gamified multiple-choice quiz app falls in the middle of this spectrum. The simple learning task of memorizing a list of facts is made into a game through the presentation of facts in a quiz format. However, in itself, this quiz format still lacks important game elements, such as progression, rewards, and competition. To add these elements, tested gamification mechanics have been implemented in the app, such as avatars, experience points, and badges. Accordingly, the application can more accurately be classified as a gamified multiple-choice quiz, similar to the application used by Cheong et al. (2013), rather than as a dedicated game for learning.

Although gamification for learning and game-based learning are two different concepts, they share the idea that game elements can make learning experiences more engaging. Accordingly, research into whether and why certain game elements are effective in games for learning, and in games in general, is relevant for understanding the efficacy of gamification. Academic interest in effectively using game design elements dates back at least 30 years to Malone (1982), who studied the appealing features of computer games in order to use these features to make user interfaces more interesting and enjoyable. Sweetser and Wyeth (2005) contributed greatly to our understanding of the features that make games enjoyable by developing a scale to measure game enjoyment, although they did not elaborate on the use of these features in a non-game context. Building on their work, Fu et al. (2009) developed a scale to measure the enjoyment of people playing educational games. Cozar-Gutierrez and Saez-Lopez (2016) recently reported that teachers’ interest in using games in the classroom, and the innovativeness they perceive in doing so, are strong, showing a desire to understand best practices for incorporating games and gamified education in the classroom.

Despite academic interest in understanding and using the appealing features of games, academia has been slow to react to the surge of gamification projects in businesses and on the Internet (Huotari and Hamari, 2012). Initial support for the efficacy of gamification mainly came from businesses, where the idea that tasks can be made more efficient and engaging by wrapping them in game design elements rapidly gained popularity. Yu-Kai Chou, an influential gamification expert, collected and published a list of 95 documented gamification cases, selected on the criterion that the documentation reports return-on-investment indicators (Chou, 2017). Overall, these cases show that gamification can indeed have a strong, positive impact on engagement and performance in various activities. Although it is not reported how these cases were selected, and the list may well be biased toward successful cases, the collection adds weight to the claim that gamification can work, given the right context and implementation.

A recent literature study of academic gamification research found that most studies on the subject verified that gamification can work, even though effects differ across contexts (Hamari et al., 2014). By context, the authors refer to the type of activity being gamified, such as exercise, sustainable consumption, monitoring, or education. In the context of education—which is the focus of the current study—the outcomes of gamification were mostly found to be positive, as seen in the forms of increased motivation, engagement, and enjoyment (e.g. Cheong et al., 2013; Denny, 2013; Dong et al., 2012; Li et al., 2012).

Another literature study that focused broadly on the effects of games but also discussed games for learning in particular found that “players seem to like the game-based approach to learning and find it motivating and enjoyable” (Connolly et al., 2012: 671). Yet, they also argue that the motivational features of learning oriented games should be examined in more detail and note that evidence for more effective learning was not strong.

In all, these studies concluded that games have the potential to be useful tools for learning, but stress that their efficacy depends heavily on which game features are used and how they are implemented (Connolly et al., 2012; Hamari et al., 2014). Features such as feedback options and the way in which the level of difficulty adapts to a player’s skills can be critical to a game’s success and need to be investigated in more detail. For example, Barata et al. (2015) report success in clustering student types based on learning performance in a gamified engineering course. Over two years, by targeting student groups who responded differently to the learning environment, Barata et al. (2015) were able to reduce the share of underperforming students from 40% to 25% of the class. Targeting learning content and interventions to particular types of players can therefore improve the outcomes of gamified applications in higher education.

The efficacy of gamifying learning through feedback

Lee and Hammer (2011) argue that feedback is central to the potential of gamification. To make people feel that they are successfully improving and heading toward a goal, games can provide explicit feedback that shows this progress. Studies indicate that even simple virtual reward systems, such as experience points and badges, can increase players’ engagement (Denny, 2013; Fitz-Walter et al., 2011). Similarly, Hatala et al. (2014) conducted a meta-analysis to investigate whether feedback improved the learning of procedural skills in medical education. Their results demonstrate that providing feedback moderately enhances learning. In addition, they found that terminal feedback (i.e. feedback given at the end of the learning activity) was more effective than concurrent feedback (i.e. feedback given during the learning activity). These analyses point toward the effectiveness of feedback as a mechanism to enhance learning. For the current study, we therefore expect a similar pattern, leading to:

H1. Students receiving feedback play more sessions of the gamified app compared to students receiving no feedback.

However, not all feedback is equally effective in achieving its goal (e.g. Burgers et al., 2015; Kluger and DeNisi, 1996). For instance, one study shows that the effectiveness of negative feedback (i.e. feedback emphasizing the elements that could be improved upon) and positive feedback (i.e. feedback emphasizing the elements that went well during an activity) depends on the task at hand (Burgers et al., 2015). Negative feedback was more effective than positive feedback when the problem could immediately be repaired (e.g. in the case of a game which enables a new session to be started immediately). By contrast, positive feedback was more effective than negative feedback when repair was delayed (e.g. in the case of a game which only enables one session per specified time period). Thus, when using feedback, it is important to match the specific type of feedback to the specific task at hand.

One type of feedback which has been associated with enhancing effectiveness is the use of tailored feedback over generic feedback (e.g. De Vries et al., 2008; Krebs et al., 2010). In tailored feedback, the specific content is personalized (“tailored”) to the individual, through mechanisms like personalization (i.e. addressing the receiver by name) or by adapting the feedback to their individual performance (e.g. by including descriptive statistics that refer to the receiver’s personal performance). By contrast, generic feedback is similar for all addressees receiving the feedback. Tailored messaging may take the form of, for example, frequent prompt or reminder emails (Neff and Fry, 2009), often edited (or tailored) to include information specific to particular participants (Schneider et al., 2013). While simple interventions such as emails can increase participants’ logging into online systems, tailored information can further increase desired behaviors in specific cases (Krebs et al., 2010; Neff and Fry, 2009).

Nevertheless, other studies show different results (e.g. Kroeze et al., 2006; Noble et al., 2015). A systematic review by Kroeze et al. (2006), for instance, demonstrates that the effectiveness of tailoring depends on the specific kind of behavior targeted. For 11 out of 14 interventions targeting fat reduction, the authors found positive effects of tailoring over a generic intervention. By contrast, they found such positive effects for only 3 out of 11 interventions targeting physical activity. Thus, whether tailoring improves effectiveness may also depend on contextual factors such as the targeted behavior. In the current study, we aim to motivate students to keep using a gamified app to increase learning. For this specific context, it is not yet known whether tailoring is an effective strategy. Yet, given that tailoring typically boosts performance across behaviors compared to generic information (e.g. Krebs et al., 2010), we expect that:

H2. Students receiving tailored feedback play more sessions of the gamified app compared to students receiving generic feedback.

In pilot studies of the application used in this study, we observed that some players tended to binge play. Within a matter of days, they would play so many sessions that they quickly learned the answers to most questions. Although this can be considered a success in terms of engagement, it can actually harm long-term recall. One of the challenges for gamification in a learning task, and even more so for games for learning, is thus not to create as much engagement as possible, but to create the right type and amount of engagement to best achieve the learning goal.

One of the goals of any educational intervention is to stimulate deep learning, which means that students retain the most important information even after the intervention has been completed. In that light, many studies highlight the benefits of distributed practice, in which students spread (“distribute”) their learning and practice activities over a number of relatively short time intervals instead of cramming all learning into one long session. For instance, a recent study by Heidt and colleagues (2016) contrasted two versions of a training program meant to teach students how to conduct a specific type of police interview. One version consisted of a single two-hour session containing all information, while the other spaced the program into two one-hour sessions (i.e. distributed learning). Results demonstrated that, on average, participants in the distributed-learning condition performed better: they asked more open questions and elicited more detailed information through these open questions. This suggests that distributed learning can enhance training outcomes.

This study by Heidt and colleagues (2016) is not the only one to show these advantages of distributed learning. A review by Dunlosky and colleagues (2013) argues that distributed practice is one of the best-researched topics in the field of education studies. Typically, research on distributed practice focuses on two elements: the spacing of activities (i.e. the number of learning activities planned to cover all materials) and the time lag between activities. The review by Dunlosky et al. (2013) demonstrated that distributed practice enhances learning across a variety of learning contexts. Thus, the literature suggests that it is better to spread learning over several sessions than to concentrate it in one large binge.

Many studies on distributed practice focus on specific training programs with set dates for educational activities (such as the interview-training sessions described by Heidt and colleagues, 2016). Gamification interventions offer the technological possibility of also stimulating distributed learning on an individual basis through software design. One way to do this is to impose a daily limit on players. Such a daily limit prevents users from gorging on all content in one large binge and may instead stimulate players to return to the content on later dates, thus encouraging distributed practice. However, an important condition for this feature is that it should not reduce the overall number of sessions played. We test whether this effect can be achieved through gamification, formulating the following hypothesis:
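To make the mechanism concrete, the sketch below shows a minimal server-side daily session cap in Python. It is not the app’s actual implementation. The class name `DailySessionLimiter`, the in-memory counter keyed by player and calendar day, and the default of four sessions (the cap used in this study’s daily-limit condition) are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date


class DailySessionLimiter:
    """Hypothetical sketch: caps how many sessions a player may start per calendar day."""

    def __init__(self, max_sessions_per_day: int = 4) -> None:
        self.max_sessions_per_day = max_sessions_per_day
        # (player_id, day) -> number of sessions already started on that day
        self._counts: dict[tuple[str, date], int] = defaultdict(int)

    def try_start_session(self, player_id: str, day: date) -> bool:
        """Record and allow the session if the player is under today's cap, else refuse."""
        key = (player_id, day)
        if self._counts[key] >= self.max_sessions_per_day:
            return False  # cap reached: the player has to come back on a later day
        self._counts[key] += 1
        return True


limiter = DailySessionLimiter()
monday, tuesday = date(2016, 3, 7), date(2016, 3, 8)
print([limiter.try_start_session("p1", monday) for _ in range(5)])
print(limiter.try_start_session("p1", tuesday))
```

Because the counter is keyed by calendar day, the limit resets automatically on the next day. It does not cap total play, which is why the total number of sessions can, in principle, stay the same.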

H3. Compared to students without a daily limit in playing the gamified app, students with a daily limit (a) play on more different days and (b) play an equal number of sessions overall.

To motivate people to achieve their best performance, games can be designed to increase the difficulty of an activity interactively to match the player’s growth in skill (Barata et al., 2015; Garris et al., 2002; Malone and Lepper, 1987). Ensuring the right level of challenge can, however, be difficult, depending on the goal of an educational or serious game. In the current study, the goal of the app is knowledge acquisition: letting students learn a list of facts. For this type of learning task, it is not always possible to implement progressive difficulty levels. In our case, the facts are mostly orthogonal, in the sense that there is no overarching skill to be learned, as would be the case for math or language acquisition tasks. Players thus either know the answer to a question or they do not, and when posed a novel question, they have no previously acquired skill to rely on. This complicates the goal of keeping players performing at their best.

Still, it is possible to influence how well players perform through the choice of questions. If players perform very well, to the point where they might find the activity too easy, one might next present more difficult questions, such as questions they have not answered before. Conversely, if a player is performing poorly, one might present easy questions, for example, questions the player already answered correctly in previous rounds. This interactive selection of questions is a distinct advantage over textbook learning. Furthermore, this is where client-server infrastructures hold important potential. Many contemporary applications connect to servers, which makes it possible for the host of a game to monitor and aggregate playing results. This global-level information can be used to optimize the question-selection algorithm, for instance by estimating how difficult each question is from players’ average performance on it.
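The global-level idea can be sketched in Python as follows. The sketch assumes, hypothetically, that the server logs one `(question_id, is_correct)` record per answer from all players. Difficulty is estimated as one minus the proportion of correct answers. A small pseudo-count prior (our assumption, not part of the text) keeps a question that has been answered only once or twice from being rated as trivially easy or impossibly hard.

```python
from collections import Counter


def estimate_difficulty(
    answers: list[tuple[str, bool]],
    prior_correct: float = 1.0,
    prior_total: float = 2.0,
) -> dict[str, float]:
    """Estimate each question's difficulty as 1 - smoothed proportion of correct answers.

    The prior acts like `prior_total` imaginary answers, `prior_correct` of them correct,
    so sparsely answered questions are pulled toward a difficulty of 0.5.
    """
    correct: Counter = Counter()
    total: Counter = Counter()
    for question_id, is_correct in answers:
        total[question_id] += 1
        correct[question_id] += int(is_correct)
    return {
        q: 1.0 - (correct[q] + prior_correct) / (total[q] + prior_total)
        for q in total
    }


# Illustrative answer log pooled across players (not the study's data).
log = [("q1", True)] * 9 + [("q1", False)] + [("q2", False)] * 3 + [("q2", True)]
print(estimate_difficulty(log))
```

A selection algorithm could then rank questions by this estimate when it decides which “difficult” or “easy” questions to serve.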

At present, there does not appear to be any empirical support for the assumption that player performance on a quiz affects prolonged play. In the current study, we test this assumption by analyzing to what extent prolonged play can be predicted by a player’s recent performance. Based on the theory that the level of challenge should be neither too low nor too high relative to an individual’s mastery of the task (Csikszentmihalyi, 1990; Garris et al., 2002; Giora et al., 2004), we expect players to become demotivated if the task is too easy or too difficult. This implies that the effect of recent performance on prolonged play should form an inverted u-curve. Play should peak at the level of “optimal innovation” (Giora et al., 2004), when the task is neither too easy nor too difficult. Thus, we hypothesize that:

H4. The relation between performance and prolonged play follows an inverted u-curve, with prolonged play decreasing if players perform (a) very poorly, and (b) very well.
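One common way to test an inverted u-curve is to add a squared performance term to the model. The hypothesis is consistent with the data when that term’s coefficient is negative and the peak of the fitted curve lies inside the observed range of performance. The stdlib-only Python sketch below fits y = b0 + b1·x + b2·x² by ordinary least squares. It is illustrative only: the data in the usage example are synthetic, and the study’s own model for prolonged play may differ.

```python
def fit_quadratic(xs: list[float], ys: list[float]) -> tuple[float, float, float]:
    """Ordinary least squares fit of y = b0 + b1*x + b2*x**2 via the normal equations."""
    if len(set(xs)) < 3:
        raise ValueError("need at least three distinct x values")
    # Design rows [1, x, x^2]; build X^T X (3x3) and X^T y (length 3).
    rows = [[1.0, x, x * x] for x in xs]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    # Solve the augmented system with Gauss-Jordan elimination and partial pivoting.
    aug = [xtx[i] + [xty[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(3):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The left 3x3 block is now diagonal, so each coefficient is a simple ratio.
    b0, b1, b2 = (aug[i][3] / aug[i][i] for i in range(3))
    return b0, b1, b2


# Synthetic example: an exact inverted U in "share of questions correct" peaking at 0.5.
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1 + 4 * x - 4 * x ** 2 for x in xs]
b0, b1, b2 = fit_quadratic(xs, ys)
print(b2 < 0, -b1 / (2 * b2))  # negative curvature, peak near x = 0.5
```

The peak of the fitted parabola is at x = -b1 / (2·b2). If that value lies outside the observed performance range, a negative b2 alone does not demonstrate an inverted U.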

The study was conducted in two periods at a Faculty of Social Sciences of a public university in the Netherlands: March to April 2016 (period 1; including all students of the School of Social Sciences) and October to December 2016 (period 2; including only first-year students of the same Faculty of Social Sciences). We informed students about the app by sending out emails, putting up posters, and presenting the app in lectures. To participate, students had to fill out a one-minute online introductory survey that could be completed on a mobile phone. The link to the survey was distributed by email as a shortened link and was also made accessible as a QR code. After completing the survey, students were automatically assigned to conditions and received an invitation to use the app within 24 hours. The app itself could be downloaded for free from official app stores such as Google Play; students were given directions in the campaign material and at the end of the brief introductory survey.

In total, 2444 students were contacted in period 1 and 706 students in period 2 (grand total: 3150 students). Of all students contacted, 307 (9.7%) completed the survey: 223 (9.1%) in the first period and 84 (11.9%) in the second period. One hundred and one students (3.2%) completed all registration steps: 70 (2.9%) in the first period and 31 (4.4%) in the second period.
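The reported response rates can be recomputed directly from the counts per period. The short Python check below does this; the dictionary layout and the helper name `pct` are ours.

```python
# Counts reported in the text, per recruitment period.
contacted = {"period 1": 2444, "period 2": 706}
completed_survey = {"period 1": 223, "period 2": 84}
registered = {"period 1": 70, "period 2": 31}


def pct(part: int, whole: int) -> float:
    """Percentage of `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


for period, n in contacted.items():
    print(period, pct(completed_survey[period], n), pct(registered[period], n))

total = sum(contacted.values())  # 3150 students contacted in total
print("overall", pct(sum(completed_survey.values()), total), pct(sum(registered.values()), total))
# prints: overall 9.7 3.2
```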

Table 1 contains the descriptive statistics of the participants. Overall, there were more female (65.3%) than male participants, and most participants were in their early twenties (M = 22.53; SD = 3.51). As expected, first-year bachelor and premaster students were generally more interested in the app, because the information is newer and more relevant to them (e.g. details of exam regulations; see above). The over-representation of Communication Science students and of Culture, Organization, and Management students reflects the relatively high number of students in these programs. Furthermore, students were asked to rate their prior knowledge about the university on a five-point scale; on average, they considered their knowledge to be between neutral and good (M = 3.61; SD = 0.77). Notably, the 206 students who filled in the survey but did not use the app did not score differently (M = 3.61; SD = 0.76), suggesting that prior knowledge was not a relevant factor in their decision not to participate.

The experiment had a 2 (daily limit: present vs. absent) × 3 (feedback: none, generic, personalized) between-subjects design. Participants in the daily-limit condition could play at most four sessions per day; participants without a daily limit could play as many sessions per day as they wanted. Participants were randomly assigned to one of the six conditions.
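Random assignment to the six cells of the 2 × 3 design can be sketched as simple randomization, in which each participant independently draws one condition. This is an assumption: the text does not state whether simple or blocked randomization was used. The identifiers below are hypothetical.

```python
import itertools
import random

DAILY_LIMIT = ("present", "absent")
FEEDBACK = ("none", "generic", "personalized")
# The six cells of the 2 x 3 between-subjects design.
CONDITIONS = list(itertools.product(DAILY_LIMIT, FEEDBACK))


def assign_conditions(participant_ids, seed=None):
    """Simple randomization: each participant independently receives one of the six cells."""
    rng = random.Random(seed)
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}


print(assign_conditions(["s1", "s2", "s3"], seed=2016))
```

Simple randomization does not guarantee equal cell sizes. Imbalance also arises when, as here, assignment happens at survey completion but not every assigned student goes on to use the app.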

Participants in the feedback conditions received a weekly email on Monday for three consecutive weeks that encouraged them to play (if they did not play that week) or to play more. The difference between the generic and personalized feedback conditions was limited to the information provided in the email. Participants in the generic-feedback condition were not addressed by name, and the email only reported whether or not they played in the previous week. Participants in the personalized-feedback condition were addressed by their first name, and the email reported the exact number of sessions they played in the previous week. The encouragement message also changed depending on how many sessions were played. The personalized feedback condition deliberately did not include additional support, such as offering tips or replying to specific questions that the participant answered incorrectly. The condition thereby focuses purely on whether the participant was addressed as a generic and anonymous user versus as an individual that is personally monitored. The effect investigated in this study is thus only a communication effect and not an effect of offering a different learning experience.
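The contrast between the two feedback conditions can be illustrated with a hypothetical message generator. The wording and the session thresholds for the encouragement line are invented for illustration. Only the structure follows the description above. The generic condition has no name and only a played/did-not-play report. The personalized condition has the first name, the exact session count, and count-dependent encouragement.

```python
from typing import Optional


def weekly_feedback(condition: str, sessions_last_week: int,
                    first_name: Optional[str] = None) -> Optional[str]:
    """Compose a hypothetical Monday email; returns None in the no-feedback condition."""
    if condition == "none":
        return None
    if condition == "generic":
        # Anonymous greeting; only whether the participant played is reported.
        played = "you played last week." if sessions_last_week > 0 else "you did not play last week."
        return f"Dear student, {played} Keep playing to learn more about your university!"
    if condition == "personalized":
        if first_name is None:
            raise ValueError("personalized feedback needs the participant's first name")
        # Encouragement varies with the exact session count (thresholds are illustrative).
        if sessions_last_week == 0:
            nudge = "Why not start a session today?"
        elif sessions_last_week < 10:
            nudge = "Nice start, can you beat that this week?"
        else:
            nudge = "Great work, keep it up!"
        return f"Dear {first_name}, you played {sessions_last_week} session(s) last week. {nudge}"
    raise ValueError(f"unknown condition: {condition!r}")


print(weekly_feedback("generic", 5))
print(weekly_feedback("personalized", 5, first_name="Anna"))
```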

The number of participants per condition is reported in Table 2. The distribution of participants is not perfectly balanced, because not all students who completed the survey (upon which they were assigned to a condition) actually participated.

The application used for this study, Knowingo (https://knowingo.com/), was developed by a partner company. It is normally licensed to businesses that use it as a tool to disseminate factual knowledge throughout their organizations. Traditionally, learning this type of knowledge would require employees to study non-interactive documents. The purpose of Knowingo is to make this type of knowledge acquisition more engaging and efficient by presenting the learning task as a multiple-choice quiz.

By itself, a simple multiple-choice quiz still lacks many important game elements. To make the application more engaging, Knowingo therefore incorporates various tested gamification features. The first time players log in, they need to choose an avatar that is visible to themselves and other players whom they can challenge. By playing, and by giving the right answers, players receive experience points to grow in levels and unlock virtual rewards. Each day players also receive new quests, such as playing for streaks of correct answers, that give additional experience and rewards.

Furthermore, the game algorithm is designed to make users play short consecutive sessions. Sessions consist of seven questions, and each question has a time limit. To prevent users from getting bored or frustrated, the selection of questions takes the user’s session history into account. If players are performing poorly, they can be given easier questions or questions that they have already answered before, to boost their score; if players have perfect scores, they are more likely to receive new and difficult questions. One of the development goals of the application is to optimize this question-selection algorithm by using the client-server infrastructure to collect information from all users, in order to learn the difficulty of different questions and use this to provide an adaptive learning experience. The version used in this study does not yet implement this adaptive learning experience.
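The selection rule described above might look roughly like the following Python sketch. It is not Knowingo’s algorithm. It uses “answered correctly before” as a stand-in for “easy” and “not yet answered correctly” as a stand-in for “new and difficult”. The score thresholds and the shares of unseen questions are hypothetical parameters; only the session length of seven questions comes from the text.

```python
import random

SESSION_LENGTH = 7  # questions per session, as described in the text


def build_session(all_questions, mastered, last_score, rng=None):
    """Hypothetical picker: favor already-mastered questions after a poor session,
    only unseen questions after a perfect one, and roughly an even mix otherwise.

    all_questions: list of question ids; mastered: set of ids the player answered
    correctly before; last_score: correct answers in the previous session (0-7).
    """
    rng = rng or random.Random()
    unseen = [q for q in all_questions if q not in mastered]
    seen = [q for q in all_questions if q in mastered]
    if last_score <= 2:                  # poor session: mostly familiar, easier questions
        share_unseen = 0.25
    elif last_score == SESSION_LENGTH:   # perfect session: new material only
        share_unseen = 1.0
    else:                                # in between: about half new, half familiar
        share_unseen = 0.5
    n_unseen = min(len(unseen), round(share_unseen * SESSION_LENGTH))
    picked = rng.sample(unseen, n_unseen)
    picked += rng.sample(seen, min(len(seen), SESSION_LENGTH - len(picked)))
    # Top up with further unseen questions if the mastered pool was too small.
    spare = [q for q in unseen if q not in picked]
    picked += rng.sample(spare, min(len(spare), SESSION_LENGTH - len(picked)))
    return picked


questions = [f"q{i}" for i in range(200)]  # the study used 200 questions
print(build_session(questions, mastered=set(questions[:20]), last_score=1, rng=random.Random(0)))
```

Server-side difficulty estimates, which the version used in this study did not yet implement, could replace the mastered/unseen split as the ranking signal.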

For the current study, the Knowingo app was used to help students learn relevant information about their university, ranging from exam regulations to social events. We developed 200 unique multiple-choice questions, each with four possible answers, to ensure that there would be enough content to prevent users from getting the same questions too often. Examples include “what does ECTS stand for?,” “what must you always bring to an exam?,” “who can help you with course registration issues?,” and “when is the pub quiz in the [campus cafe]?”

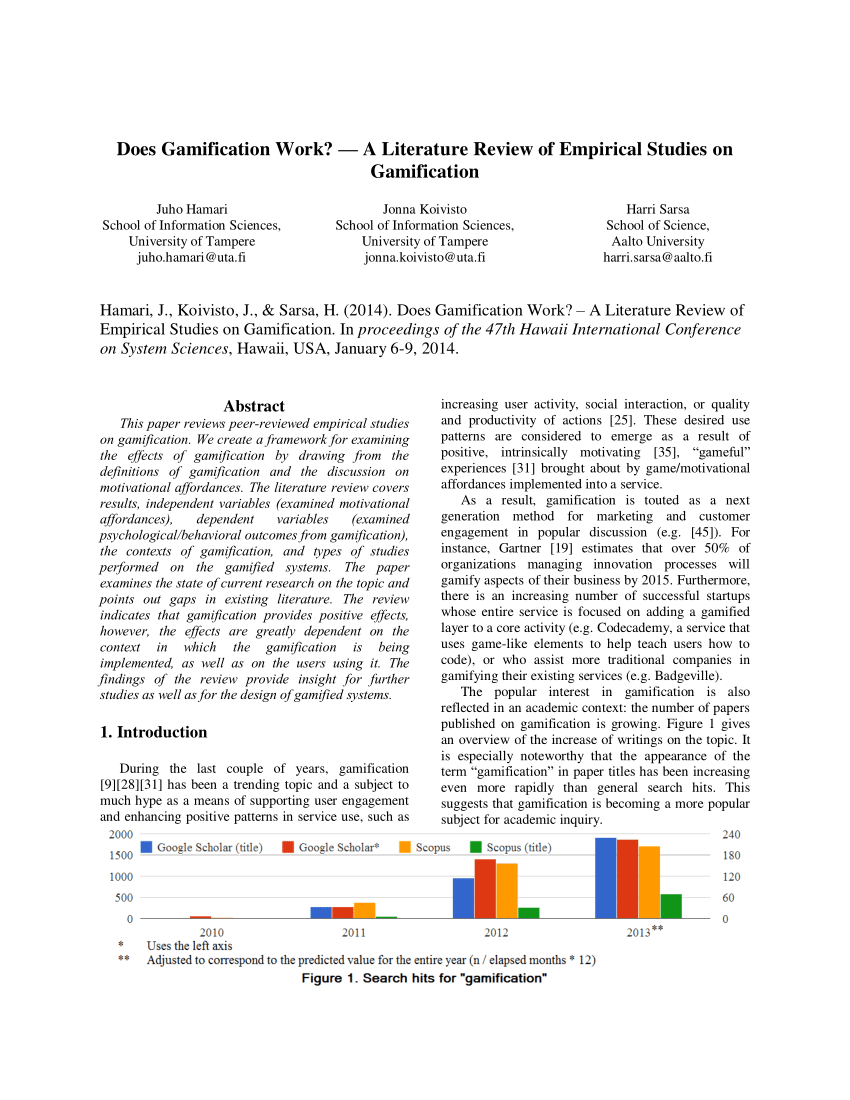

To test the hypotheses about the effects of feedback on player participation, we used regression analysis to explain the variance in the number of sessions played by each participant. Figure 1 shows the distribution of this dependent variable. Note that this is a heavily over-dispersed count variable: most participants played only one or a few sessions, but several players continued for more than 200 sessions. Accordingly, we used a negative binomial generalized linear model. Results are presented in Table 3. Contrary to our hypothesis, we did not find a significant effect of feedback messages in general on the number of sessions played (b = 0.449, p = 0.310). H1 is therefore not supported.
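Over-dispersion is what motivates a negative binomial rather than a Poisson model, and it can be checked with the dispersion index (sample variance divided by the mean). Poisson counts have a variance equal to their mean, so their index is near 1. The counts in the usage example are illustrative and are not the study’s data. A full negative binomial regression would typically be fitted with a statistics package, for example the `NegativeBinomial` model in statsmodels.

```python
from statistics import fmean, variance


def dispersion_index(counts: list[int]) -> float:
    """Sample variance divided by the mean: near 1 for Poisson counts, much larger if over-dispersed."""
    mean = fmean(counts)
    if mean == 0:
        raise ValueError("dispersion index is undefined for an all-zero sample")
    return variance(counts) / mean


# Illustrative sessions-per-player counts with a long right tail (not the study's data).
example = [1, 1, 2, 1, 3, 2, 1, 5, 250, 1]
print(round(dispersion_index(example), 1))
```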

Figure 1. Histogram of number of sessions (logarithmic scale) per player (N = 101).


Table 3. Negative binomial regression predicting the number of sessions played per user.

Our second hypothesis was that tailored feedback messages would have a stronger effect on the number of sessions played than generic feedback messages. Alternatively, since we did not find a general effect of feedback, we expected at least an effect of tailored feedback. However, tailored feedback messages did not have a significant effect (b = –0.118, p = 0.341). Instead, the pattern was reversed: the number of sessions played was significantly higher for participants in the generic feedback condition (b = 0.821, p < 0.05). Additionally, male participants tended to play more sessions than female participants (b = 0.924, p < 0.01), and older participants tended to play fewer sessions (b = –0.102, p < 0.05).
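Because a negative binomial model uses a log link, its coefficients are easiest to read after exponentiating. The value exp(b) is the multiplicative change in the expected number of sessions, known as the incidence rate ratio, holding the other predictors constant. The sketch below applies this to the coefficients reported above. It assumes the standard log link, that the generic-feedback coefficient is a contrast with the reference category used in Table 3 (not reproduced here), and that age is measured in years.

```python
import math

# Coefficients (b) reported in the text for the negative binomial model.
coefficients = {
    "generic feedback": 0.821,
    "male": 0.924,
    "age (per year, assumed unit)": -0.102,
}

def incidence_rate_ratio(b):
    """Multiplicative effect on the expected count under a log link."""
    return math.exp(b)

for name, b in coefficients.items():
    irr = incidence_rate_ratio(b)
    print(f"{name}: b = {b:+.3f}, exp(b) = {irr:.2f} ({(irr - 1) * 100:+.0f}%)")
```

Read this way, the generic-feedback coefficient corresponds to roughly 2.3 times as many expected sessions. Each additional year of age corresponds to roughly 10% fewer.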

Our third hypothesis concerns the impact of a daily limit. We hypothesized that students with a daily limit would play a similar number of sessions, spread over more days, compared to students without a daily limit. To test this, we ran a negative binomial regression analysis with the number of unique days played as the dependent variable. For this analysis, we only included participants who, on at least one day, played four or more sessions (n = 35), since the other participants would not have experienced an effect of the daily limit. Given the small sample size, we included only two independent variables: the daily limit condition (dichotomous) and, as a control, the number of sessions played. The results, presented in Table 4, show that participants in the daily limit condition indeed played on more unique days (b = 0.620, p < 0.01). H3a is therefore supported.
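The dependent variable and the inclusion rule can be derived from session logs roughly as follows. The log format (one `(user_id, start_time)` record per session) and the use of calendar dates in the timestamps' own time zone are assumptions made for illustration. The paper does not describe how days were delimited, and the log below is made up.

```python
from collections import defaultdict
from datetime import datetime

def days_summary(sessions):
    """sessions: iterable of (user_id, start_time) tuples.

    Returns {user_id: (unique_days, total_sessions, max_sessions_in_one_day)}.
    """
    per_day = defaultdict(lambda: defaultdict(int))  # user -> date -> count
    for user, start in sessions:
        per_day[user][start.date()] += 1
    return {
        user: (len(days), sum(days.values()), max(days.values()))
        for user, days in per_day.items()
    }

def eligible_users(summary, threshold=4):
    """Users who at least once played `threshold` or more sessions in one day,
    i.e. those who could have run into a four-session daily limit."""
    return sorted(u for u, (_, _, peak) in summary.items() if peak >= threshold)

log = [
    ("a", datetime(2018, 3, 1, 10, 0)), ("a", datetime(2018, 3, 1, 10, 5)),
    ("a", datetime(2018, 3, 1, 10, 9)), ("a", datetime(2018, 3, 1, 10, 14)),
    ("a", datetime(2018, 3, 2, 9, 0)),
    ("b", datetime(2018, 3, 1, 12, 0)), ("b", datetime(2018, 3, 3, 12, 0)),
]
summary = days_summary(log)
print(summary)  # per user: (unique days, sessions, busiest-day count)
print(eligible_users(summary))
```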


Table 4. Negative binomial regression predicting the number of unique days played by users.

Playing on more different days, however, would not benefit learning if the daily limit reduced the overall number of sessions played. Participants in the daily limit condition could only play four sessions each day. Our results showed that they did not play fewer sessions overall (M = 66.44, Mdn = 17.5) than participants without a daily limit (M = 41.29, Mdn = 22). A nonparametric Mann–Whitney–Wilcoxon test showed that the difference was not significant (W = 156.5, p = 0.921), which is consistent with H3b. This suggests that participants in the daily limit condition were not less motivated than those without a daily limit. Instead, they spread their sessions over more days, which is the intended effect of this feature.
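For readers unfamiliar with the statistic, the sketch below shows how a W value of this kind is computed. It follows the convention of, for example, R's `wilcox.test`: W is the number of (x, y) pairs in which the first group's value is larger, with ties counted as one half. The paper does not say which software was used. The half-integer W reported above does indicate tied values between the groups, and with ties a normal approximation with tie and continuity corrections, as implemented here, is the usual choice.

```python
import math
from collections import Counter

def mann_whitney_w(x, y):
    """W statistic: pairs where x beats y, ties counted as 0.5."""
    w = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                w += 1.0
            elif xi == yj:
                w += 0.5
    return w

def mann_whitney_test(x, y):
    """Two-sided test via the normal approximation with tie and
    continuity corrections. Returns (W, p)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    w = mann_whitney_w(x, y)
    mu = n1 * n2 / 2
    tie_sizes = Counter(list(x) + list(y)).values()
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    if sigma == 0:  # all values identical: no evidence of a difference
        return w, 1.0
    diff = w - mu
    correction = 0.5 if diff > 0 else (-0.5 if diff < 0 else 0.0)
    z = (diff - correction) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, min(p, 1.0)

print(mann_whitney_test([3, 17, 18, 40], [5, 17, 22, 90]))  # made-up counts
```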

To investigate whether a player’s experience of difficulty affects their motivation to play (H4), we analyzed whether performance in each individual session affects the probability of continuing to play. For this analysis, we used a multilevel logistic regression with random intercepts, in which cases are individual sessions nested in participants. The dependent variable, prolonged play, indicates whether a participant started a new session within 15 minutes after finishing the current session. Our independent variable of interest is session performance, which indicates how many of the seven questions in the current session were answered correctly. We control for the participant’s streak, which is the number of times the participant had already continued playing, each time within 15 minutes of the previous session. We also control for the daily limit condition and its interaction with streak, since participants in this condition cannot continue playing after four sessions.
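The session-level variables can be built from a player's log along these lines. Several details are assumptions made for illustration: the record layout (start time, end time and number correct per session), the function name, and the reading of streak as the number of continuations earlier in the current chain of sessions, resetting after a gap longer than 15 minutes.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=15)  # continuation window used in the paper

def session_rows(sessions):
    """sessions: one player's list of (start, end, n_correct), in any order.

    Returns one dict per session with performance (correct answers out of 7),
    streak (continuations so far in the current chain) and prolonged_play (0/1).
    """
    ordered = sorted(sessions)
    rows = []
    streak = 0
    for i, (start, end, n_correct) in enumerate(ordered):
        nxt = ordered[i + 1] if i + 1 < len(ordered) else None
        # Prolonged play: the next session starts within 15 minutes of this one ending.
        prolonged = int(nxt is not None and nxt[0] - end <= WINDOW)
        rows.append({"performance": n_correct, "streak": streak,
                     "prolonged_play": prolonged})
        streak = streak + 1 if prolonged else 0  # a long gap starts a new chain
    return rows

t0 = datetime(2018, 3, 1, 10, 0)  # made-up log for one player
log = [
    (t0, t0 + timedelta(minutes=5), 7),
    (t0 + timedelta(minutes=10), t0 + timedelta(minutes=15), 6),
    (t0 + timedelta(minutes=60), t0 + timedelta(minutes=65), 4),
]
for row in session_rows(log):
    print(row)
```

Note that a player's last logged session is coded 0 by construction, since no later session exists.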

Results are presented in Table 5, which shows a negative effect of performance on prolonged play (b = –0.134, p < 0.05). This indicates that participants might indeed lose motivation or become bored the further they perform above their average. This is consistent with the declining side of the inverted U-curve relation between performance and prolonged play posited in H4. However, we did not find any indication that participants also lose motivation when they perform below their average (see Note 1). This is likely related to a ceiling effect in performance: on average, 5.6 out of 7 questions were answered correctly (SD = 1.30). For many students, then, the questions in the current application may not have been challenging enough. Based on these results, we conclude that H4a is not supported, but H4b is supported.
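The model described above can be written as follows, with sessions i nested in participants j. The coefficient labels are ours. The normal distribution of the random intercepts is the standard assumption for this kind of model and is not stated in the text.

```latex
\operatorname{logit} \Pr(y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{perf}_{ij} + \beta_2\,\mathrm{streak}_{ij}
  + \beta_3\,\mathrm{limit}_j + \beta_4\,(\mathrm{limit}_j \times \mathrm{streak}_{ij}) + u_j,
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2)

e^{\hat{\beta}_1} = e^{-0.134} \approx 0.875
```

On the odds scale, each additional correct answer in a session is thus associated with roughly 12.5% lower odds of starting another session within 15 minutes. This holds streak, condition and the participant's random intercept fixed.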


Table 5. Logistic regression analysis, with random intercepts for users, predicting prolonged play.


This study investigated the use of a quiz app designed to help university students acquire essential information about how their university functions. It also analyzed how manipulating game features and feedback can enhance engagement and learning. The first part of our study examined the effect of feedback on player participation. No differences were found between the feedback conditions combined and the no-feedback condition. However, a closer inspection of the generic versus personalized (“tailored”) feedback conditions revealed that generic feedback did have a positive effect on player participation, whereas no such effect could be established for personalized feedback. Personalized feedback included personally identifiable information (e.g. the number of sessions one had played thus far), which may induce a boomerang effect (Wattal et al., 2012). This is an important topic for future studies, which could examine whether the amount and type of personalization make a difference. In particular, one potential explanation that requires further inquiry is that it may matter whether personalization has a clear benefit to the user. In our study, the personalized feedback condition deliberately did not receive additional help or benefits. More personalization might not induce a boomerang effect if it clearly benefits the user, as with feedback on specific answers or links to more information.

While not a prominent focus of this study, it is interesting to note that player participation was also associated with player demographics. Male students tended to play more sessions than female students, and older students tended to play fewer sessions than younger students. Understanding the effects of player demographics is important for the effective use of gamification, because it can inform the development of applications with specific audiences in mind. However, we recommend caution in generalizing our results in this regard. Prior research shows mixed findings, for instance, on gender effects for both engagement and learning outcomes (Khan et al., 2017; Su and Cheng, 2015). Current research into gamification is rather diverse, with different types of applications built for different learning goals, which makes it difficult to draw conclusions about the effects of social and cultural factors. A meta-analysis of demographic factors in gamification would be a welcome contribution to the field.

The second part of the study investigated whether introducing a daily limit on the number of sessions a participant can play per day can promote distributed learning. A daily limit can prevent people from binge playing a game, but a concern is that users will simply play fewer sessions rather than maintain their interest over a longer period of time. Our findings show that participants in the daily limit condition indeed played on more different days than participants who could “binge” as many sessions as they wanted, while playing a similar number of sessions. The daily limit thus did not seem to demotivate them, and it spread the learning experience out over more days. This suggests that a daily limit in a gamified app in an education setting can be a useful tool to prevent binge playing and to promote distributed learning.
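A daily limit of the kind tested here reduces to a small check before each new session. The sketch below assumes that days are counted by calendar date in the player's local time and that the limit is four sessions, as in this study. The class and method names are hypothetical and not part of Knowingo.

```python
from collections import defaultdict
from datetime import date

class DailyLimit:
    """Allows at most `max_per_day` sessions per player per calendar day."""

    def __init__(self, max_per_day=4):
        self.max_per_day = max_per_day
        self._played = defaultdict(int)  # (player, day) -> sessions started

    def try_start(self, player, day):
        """Record and allow a session if the player is under that day's limit."""
        key = (player, day)
        if self._played[key] >= self.max_per_day:
            return False
        self._played[key] += 1
        return True

limit = DailyLimit()
today = date(2018, 3, 1)  # made-up date
print([limit.try_start("player-1", today) for _ in range(5)])
```

Keying the counter on the date means the limit resets automatically the next day without any scheduled cleanup.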

Finally, we investigated whether a participant’s performance in the game affects prolonged play. Results show that when participants perform very well (in the current study, often with a perfect score), they become less likely to continue playing. This underscores the importance of ensuring that participants are sufficiently challenged. For a multiple-choice quiz about mostly independent facts, it can be difficult to manipulate the difficulty of the learning task. However, it is possible to estimate the likelihood that a participant will answer a given question correctly and to use this estimate to steer performance. With a client-server architecture in which all user data are collected centrally, information from all users can be used to improve this estimate. More large-scale research with this type of application can help us better understand how performance affects prolonged play.
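One common way to estimate the probability that a given player answers a given question correctly is an Elo-style rating system. It pools answers from all users, and both players and questions carry a rating that is updated after every answer. The paper does not say which method Knowingo uses or plans to use, so this is a swapped-in illustration. The update step K = 0.4, the logistic link and the target success rate of 0.7 are arbitrary assumptions.

```python
import math
from collections import defaultdict

class EloEstimator:
    """Elo-style estimates of player ability and question difficulty."""

    def __init__(self, k=0.4):
        self.k = k
        self.ability = defaultdict(float)     # player id -> rating
        self.difficulty = defaultdict(float)  # question id -> rating

    def p_correct(self, player, question):
        """Predicted probability that `player` answers `question` correctly."""
        gap = self.ability[player] - self.difficulty[question]
        return 1.0 / (1.0 + math.exp(-gap))

    def update(self, player, question, correct):
        """Move both ratings toward the observed outcome (correct: bool)."""
        error = float(correct) - self.p_correct(player, question)
        self.ability[player] += self.k * error
        self.difficulty[question] -= self.k * error

    def pick(self, player, candidates, target=0.7):
        """Choose the candidate whose predicted success is closest to `target`."""
        return min(candidates, key=lambda q: abs(self.p_correct(player, q) - target))

est = EloEstimator()
# Made-up answers: every player gets "q_easy" right and "q_hard" wrong.
for player in ["p1", "p2", "p3", "p4"]:
    est.update(player, "q_easy", True)
    est.update(player, "q_hard", False)
print(est.p_correct("new_player", "q_easy"), est.p_correct("new_player", "q_hard"))
print(est.pick("new_player", ["q_easy", "q_hard"]))
```

Aiming for a success rate below 1.0 reflects the finding above that near-perfect sessions reduce prolonged play. The right target would have to be established empirically.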

This study has several limitations that need to be taken into account. The number of unique participants in our sample was small because the eligible population was relatively small. In addition, three factors made it difficult to get students to play. First, students might perceive voluntary participation as additional and unnecessary work. Since we were interested in the extent to which the app alone manages to engage students, we did not offer any form of compensation for participating. Second, the app is mainly directed at students who know little about the university, such as first-year students. Our pool of all students in the School of Social Sciences was therefore (deliberately) too broad in the first period of data collection. Third, the current project, which served as a pilot, was launched in the spring, close to the end of the academic year. To compensate for the low participation rate, we added a second wave of data collection that included only first-year students and started earlier in the year, in the autumn. Relative response rates were higher in the second period than in the first, but interested students remained a small minority.

Aside from limiting our sample, this also tells us something important about the challenges of gamification projects in natural educational field settings, where it would be inappropriate to compensate students for participation. This calls for more field research into whether and how we can get students to actually participate. The results of this study do offer hope: once students started playing, a non-trivial number of them became engaged, with some playing for many hours. More than improving the game itself, the challenge may be to get students to take that first step. In addition, experimental research into the efficacy of educational games may complement the valuable insights from field studies.

In conclusion, while our findings are preliminary, they suggest that careful manipulation of game mechanisms can help sustain and encourage play via a gamified application. Despite the limitations of our study, we are cautiously optimistic and hope to continue this line of work in gamifying educational settings.

We thank Loren Roosendaal and IC3D Media for allowing us to use the Knowingo application for the purpose of this research and their support in accessing the API for extracting the log data of our participants. Furthermore, we thank the education office of the Faculty of Social Sciences at the Vrije Universiteit Amsterdam, and in particular Karin Bijker, for enabling us to perform this study.

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Notes

1. The common approach to testing for an inverted U-curve would be to fit a quadratic term. Given the ceiling effect in performance in our data, however, this would not be a good way to test our hypothesis. The quadratic term would test whether prolonged play decreases when a user performs below his or her average, but even then the user is not performing poorly in absolute terms. For reference, we also fitted a quadratic effect, but this did not improve the model (χ² = 0.346, df = 1, p > 0.05).

Barata G, Gama S, Jorge J, et al. (2015) Gamification for smarter learning: tales from the trenches. Smart Learning Environments 2(10): 1–23.

Burgers C, Eden A, van Engelenburg M, et al. (2015) How feedback boosts motivation and play in a brain-training game. Computers in Human Behavior 48: 94–103.

Cheong C, Cheong F and Filippou J (2013) Quick quiz: a gamified approach for enhancing learning. In: Proceedings of the Pacific Asia conference on information systems (PACIS), Jeju Island, Korea.

Chou Y (2017) A comprehensive list of 90+ gamification studies with ROI stats [Blog post]. Available at: http://yukaichou.com/gamification-examples/gamification-stats-figures/ (accessed 20 October 2017).

Connolly TM, Boyle EA, MacArthur E, et al. (2012) A systematic literature review of empirical evidence on computer games and serious games. Computers and Education 59(2): 661–686.

Cózar-Gutiérrez R and Sáez-López JM (2016) Game-based learning and gamification in initial teacher training in the social sciences: an experiment with MinecraftEdu. International Journal of Educational Technology in Higher Education 13(2): 1–11.

Csikszentmihalyi M (1990) Flow: The Psychology of Optimal Experience. New York: Harper & Row.

de Vries H, Kremers SPJ, Smeets T, et al. (2008) The effectiveness of tailored feedback and action plans in an intervention addressing multiple health behaviors. American Journal of Health Promotion 22(6): 417–424.

Denny P (2013) The effect of virtual achievements on student engagement. In: Proceedings of the SIGCHI conference on human factors in computing systems, Paris, France, 27 April–2 May 2013, pp.763–772. New York: ACM.

Deterding S, Dixon D, Khaled R, et al. (2011) From game design elements to gamefulness: defining gamification. In: Proceedings of the 15th international academic MindTrek conference: envisioning future media environments, Tampere, Finland, 28–30 September 2011, pp.9–15. New York: ACM.

Dong T, Dontcheva M, Joseph D, et al. (2012) Discovery-based games for learning software. In: Proceedings of the SIGCHI conference on human factors in computing systems, Austin, Texas, USA, 5–10 May 2012, pp.2083–2086. New York: ACM.

Dunlosky J, Rawson KA, Marsh EJ, et al. (2013) Improving students’ learning with effective learning techniques: promising directions from cognitive and educational psychology. Psychological Science in the Public Interest 14(1): 4–58.

Fitz-Walter Z, Tjondronegoro D and Wyeth P (2011) Orientation passport: using gamification to engage university students. In: Proceedings of the 23rd Australian computer-human interaction conference, Canberra, Australia, 28 November–2 December 2011, pp.122–125. New York: ACM.

Fu FL, Su RC and Yu SC (2009) EGameFlow: a scale to measure learners’ enjoyment of e-learning games. Computers and Education 52(1): 101–112.

Garris R, Ahlers R and Driskell JE (2002) Games, motivation, and learning: a research and practice model. Simulation and Gaming 33(4): 441–467.

Gee JP (2013) Games for learning. Educational Horizons 91(4): 16–20.

Giora R, Fein O, Kronrod A, et al. (2004) Weapons of mass distraction: optimal innovation and pleasure ratings. Metaphor and Symbol 19(2): 115–141.

Hamari J, Koivisto J and Sarsa H (2014) Does gamification work? A literature review of empirical studies on gamification. In: 2014 47th Hawaii international conference on system sciences, Waikoloa, Hawaii, USA, 6–9 January 2014, pp.3025–3034. New York: IEEE.

Hatala R, Cook DA, Zendejas B, et al. (2014) Feedback for simulation-based procedural skills training: a meta-analysis and critical narrative synthesis. Advances in Health Sciences Education 19(2): 251–272.

Heidt CT, Arbuthnott KD and Price HL (2016) The effects of distributed learning on enhanced cognitive interview training. Psychiatry, Psychology and Law 23(1): 47–61.

Huotari K and Hamari J (2012) Defining gamification: a service marketing perspective. In: Proceedings of the 16th international academic MindTrek conference, Tampere, Finland, 3–5 October 2012, pp.17–22. New York: ACM.

Kapp KM (2012) The Gamification of Learning and Instruction: Game-based Methods and Strategies for Training and Education. San Francisco, CA: Pfeiffer.

Khan A, Ahmad FH and Malik MM (2017) Use of digital game based learning and gamification in secondary school science: the effect on student engagement, learning and gender difference. Education and Information Technologies 22(6): 2767–2804.

Kluger AN and DeNisi A (1996) The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin 119(2): 254–284.

Krebs P, Prochaska JO and Rossi JS (2010) A meta-analysis of computer-tailored interventions for health behavior change. Preventive Medicine 51(3): 214–221.

Kroeze W, Werkman A and Brug J (2006) A systematic review of randomized trials on the effectiveness of computer-tailored education on physical activity and dietary behaviors. Annals of Behavioral Medicine 31(3): 205–223.

Lee JJ and Hammer J (2011) Gamification in education: what, how, why bother? Academic Exchange Quarterly 15(2): 146.

Li W, Grossman T and Fitzmaurice G (2012) GamiCAD: a gamified tutorial system for first time AutoCAD users. In: Proceedings of the 25th annual ACM symposium on user interface software and technology, Cambridge, Massachusetts, USA, 7–10 October 2012, pp.103–112. New York: ACM.

Lustria MLA, Noar SM, Cortese J, et al. (2013) A meta-analysis of web-delivered tailored health behavior change interventions. Journal of Health Communication 18(9): 1039–1069.

Malone TW (1982) Heuristics for designing enjoyable user interfaces: lessons from computer games. In: Proceedings of the 1982 conference on human factors in computing systems, Gaithersburg, Maryland, USA, 15–17 March 1982, pp.63–68. New York: ACM.

Malone TW and Lepper MR (1987) Making learning fun: a taxonomy of intrinsic motivations for learning. In: Snow RE and Farr MJ (eds) Aptitude, Learning, and Instruction. Vol. 3. London, England: Routledge, pp.223–253.

Mollick ER and Rothbard N (2014) Mandatory fun: consent, gamification and the impact of games at work. The Wharton School Research Paper Series. Epub ahead of print 4 December 2017. DOI: 10.2139/ssrn.2277103.

Nah FFH, Zeng Q, Telaprolu VR, et al. (2014) Gamification of education: a review of literature. In: International conference on HCI in business, Heraklion, Crete, Greece, 22–27 June 2014, pp.401–409. Switzerland: Springer International Publishing.

Neff R and Fry J (2009) Periodic prompts and reminders in health promotion and health behavior interventions: systematic review. Journal of Medical Internet Research 11(2): e16.

Noble N, Paul C, Carey M, et al. (2015) A randomised trial assessing the acceptability and effectiveness of providing generic versus tailored feedback about health risks for a high need primary care sample. BMC Family Practice 16: 95–103.

Rohrer D (2015) Student instruction should be distributed over long time periods. Educational Psychology Review 27(4): 635–643.

Schneider F, de Vries H, Candel M, et al. (2013) Periodic email prompts to re-use an internet-delivered computer-tailored lifestyle program: influence of prompt content and timing. Journal of Medical Internet Research 15(1): e23.

Su CH and Cheng CH (2015) A mobile gamification learning system for improving the learning motivation and achievements. Journal of Computer Assisted Learning 31(3): 268–286.

Sweetser P and Wyeth P (2005) GameFlow: a model for evaluating player enjoyment in games. Computers in Entertainment 3(3): 3.

Terill B (2008) My coverage of lobby of the social gaming summit [Blog post]. Available at: www.bretterrill.com/2008/06/my-coverage-of-lobby-of-social-gaming.html (accessed 12 October 2017).

Wattal S, Telang R, Mukhopadhyay T, et al. (2012) What’s in a “name”? Impact of use of customer information in e-mail advertisements. Information Systems Research 23(3): 679–697.


Why Study A Foreign Language

The tables below provide a list of foreign languages most frequently taught in American schools and colleges. They reflect the popularity of these languages in terms of the total number of enrolled students in the United States. (Here, a foreign language means any language other than English, and includes American Sign Language.)


Lists

Below are the top foreign languages studied in public K-12 schools (i.e., primary and secondary schools). The table corresponds to the 18.5% (some 8.9 million) of all K-12 students in the U.S. (about 49 million) who take foreign-language classes.[1]

K-12

| Rank | Language | Enrollments | Percentage |
|---|---|---|---|
| 1 | Spanish | 6,418,331 | 72.06% |
| 2 | French | 1,254,243 | 14.08% |
| 3 | German | 395,019 | 4.43% |
| 4 | Latin | 205,158 | 2.30% |
| 5 | Japanese | 72,845 | 0.82% |
| 6 | Italian | 65,058 | 0.73% |
| 7 | Chinese | 59,860 | 0.67% |
| 8 | American Sign Language | 41,579 | 0.46% |
| 9 | Russian | 12,389 | 0.14% |
| | Others[2] | 255,825 | 2.87% |
| | Total | 8,907,201 | 100% |

Colleges and universities

Below are the top foreign languages studied in American institutions of higher education (i.e., colleges and universities), based on fall 2016 enrollments.[3]

| Rank | Language | Enrollments | Percentage |
|---|---|---|---|
| 1 | Spanish | 712,240 | 50.2% |
| 2 | French | 175,667 | 12.4% |
| 3 | American Sign Language | 107,060 | 7.6% |
| 4 | German | 80,594 | 5.7% |
| 5 | Japanese | 68,810 | 4.9% |
| 6 | Italian | 56,743 | 4.0% |
| 7 | Chinese | 53,069 | 3.7% |
| 8 | Arabic | 31,554 | 2.2% |
| 9 | Latin | 24,866 | 1.8% |
| 10 | Russian | 20,353 | 1.4% |
| 11 | Korean | 13,936 | 0.9% |
| 12 | Greek, Ancient | 13,264 | 0.9% |
| 13 | Portuguese | 9,827 | 0.7% |
| 14 | Hebrew, Biblical | 9,587 | 0.7% |
| 15 | Hebrew, Modern | 5,521 | 0.4% |
| | Others | 34,830 | 2.4% |
| | Total | 1,417,921 | 100% |

List of top five most commonly learned languages by year

Grades K-12

| Year | 1st | % | 2nd | % | 3rd | % | 4th | % | 5th | % | Source |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2004-2005 | Spanish | 72.9 | French | 15.0 | German | 4.2 | Latin | 2.6 | Japanese | 0.7 | [1] |
| 2007-2008 | Spanish | 72.1 | French | 14.1 | German | 4.4 | Latin | 2.3 | Japanese | 0.8 | [1] |

Higher education

| Year | 1st | % | 2nd | % | 3rd | % | 4th | % | 5th | % | Source |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1960 | French | 37.9 | Spanish | 29.7 | German | 24.2 | Russian | 5.1 | Italian | 1.8 | [3] |
| 1968 | French | 34.4 | Spanish | 32.3 | German | 19.2 | Russian | 3.7 | Latin | 3.0 | |
| 1980 | Spanish | 41.0 | French | 26.9 | German | 13.7 | Italian | 3.8 | Latin | 2.7 | |
| 1990 | Spanish | 45.1 | French | 23.0 | German | 11.3 | Italian | 4.2 | Japanese | 3.9 | |
| 1995 | Spanish | 53.2 | French | 18.0 | German | 8.5 | Japanese | 3.9 | Italian | 3.8 | |
| 1998 | Spanish | 55.6 | French | 17.0 | German | 7.6 | Italian | 4.2 | Japanese | 3.7 | |
| 2002 | Spanish | 53.4 | French | 14.5 | German | 6.5 | Italian | 4.6 | American Sign Language | 4.4 | |
| 2006 | Spanish | 52.2 | French | 13.1 | German | 6.0 | American Sign Language | 5.1 | Italian | 5.0 | |
| 2009 | Spanish | 51.4 | French | 12.9 | German | 5.7 | American Sign Language | 5.5 | Italian | 4.8 | |
| 2013 | Spanish | 50.6 | French | 12.7 | American Sign Language | 7.0 | German | 5.5 | Italian | 4.6 | |
| 2016 | Spanish | 50.2 | French | 12.4 | American Sign Language | 7.6 | German | 5.7 | Japanese | 4.9 | |


References

- [1] "Foreign Language Enrollments in K–12 Public Schools" (PDF). American Council on the Teaching of Foreign Languages (ACTFL). February 2011. Retrieved October 17, 2015.
- [2] "Others" includes (in order of quantity) Native languages, Korean, Portuguese, Vietnamese, Arabic, Hebrew, Polish, Swahili, Turkish.
- [3] Looney, Dennis; Lusin, Natalia (February 2018). "Enrollments in Languages Other Than English in United States Institutions of Higher Education, Summer 2016 and Fall 2016: Preliminary Report" (PDF). Modern Language Association. Retrieved July 2, 2018.

External links

- Enrollments in languages other than English, Modern Language Association

- Most Popular Foreign Languages, Forbes